Apparently I last posted on this blog on 8th August 2010. That's a pretty long time. I just moved. The study is filled with boxes, though I have managed to tidy up my own bedroom. I've got no wardrobe but I bought this "small wardrobe on wheels" from IKEA (the IKEA PS), which I believe can handle all the clothes I actually wear. I'm a little sick of all the unpacking and it's currently way too hot for gardening (clearing out the old roots in the soil and replacing some of the clay with more suitable soils).

As I typically do, I read what people have been talking about on the ST Forum this morning (well aware that the editorial team of the forum does a lot of agenda setting). I came across a letter on how the Singapore Tourism Board should factor in cost to evaluate whether an event was successful on some measure ("Going by tourism receipts? Then count the costs of events too", ST Forum, 18 Dec 2010). This makes complete sense to any business owner: perhaps the two most important numbers are cost and revenue. I am typically critical of bad project management, which I believe the huge Youth Olympic Games (YOG) budget overruns amounted to (S$387 million over a budget of S$105 million, with about 70% or S$260 million going to local expenditures which should be relatively easy to budget for). However, as anyone who has played games such as mahjong should know, to get good at something one needs to "pay tuition". In mahjong that tuition entails losing money as you learn the niceties of the game and how to read your opponents; in large event organization, it is experiencing the unforeseen, paying for it and learning.

Singapore's narrative is one of surviving. Being small, this entails punching way above our weight. We have done this militarily. In fact we did this in a very intelligent manner, building up fast enough to gain security but quietly enough to avoid reprisals (it also helped that we were between Malaysia and Indonesia, who were jostling for power). In this day and age, however, survival is a matter of maintaining relevance to the world rather than avoiding annexation. Again we have to punch above our weight.

Our drive to become a world X hub (where X might be "biomedical", "logistics", "water technology", etc.) is an indicator of sound strategic judgment on the part of the government. While I feel that the efforts are lacking in terms of execution, the strategy will work as people learn from experience. Organizing large events falls neatly into this framework and, like most of Singapore's major efforts, it seems that there is "tuition" to be paid. The magnitude of the "fees payable" has been alarming (cf. YOG), but there are grounds to argue (shaky though they might be) that when operating on a totally different scale, things change and past experiences are no longer such a useful guide. An organizational structure good for an organization of 100 staff will probably not be as effective at 1000 staff. Whether this holds or not, we are paying tuition to learn; what has to be done is to ensure that we are learning.

It is natural that initial cash flows are negative in a large project; however, the net present value of the project should be positive. It is key that our event organizers not be so embarrassed about operating losses that all the lessons learnt are swept under the carpet. These experiences are what we have paid for. I believe the organizers should, with the public and the services sector, pick apart the execution of past large events to distill the experiences that we have paid so much for. This way, we may start getting positive numbers in our cash flows and turn "Large Event Organization" into a profit generating machine.
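To make the cash-flow point concrete, here is a minimal sketch of a net present value calculation. The discount rate and the yearly figures are purely hypothetical numbers chosen for illustration; they are not taken from the YOG or any other real event budget.

```python
def net_present_value(rate, cash_flows):
    """Discount yearly cash flows (year 0 first) at a fixed rate and sum them."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows))


# Hypothetical figures in S$ million: early "tuition" years lose money,
# later events benefit from the lessons learnt.
cash_flows = [-100.0, -20.0, 40.0, 60.0, 80.0]
npv = net_present_value(0.05, cash_flows)

print(f"NPV at 5%: {npv:.1f}")
print("Worth doing" if npv > 0 else "Not worth doing")
```

The early negative entries are the "tuition"; the programme only makes sense if the discounted later gains outweigh them.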

Now back to the work of unpacking.

Saturday, December 18, 2010

Sunday, August 8, 2010

We Need a Clean Slate Analysis of the Economy and a Road Map

I've just watched The Yes Men Fix the World (P2P Edition). The synopsis:

- THE YES MEN FIX THE WORLD is a screwball true story about two gonzo political activists who, posing as top executives of giant corporations, lie their way into big business conferences and pull off the world's most outrageous pranks. This peer-to-peer special edition of the film is unique: it is preceded by an EXCLUSIVE VIDEO of the Yes Men impersonating the United States Chamber of Commerce.

Economies attempt to increase efficiency by progressing up and right within the production possibilities, where one group can be made better off without making anyone else worse off. It's not possible to stray out of the set of production possibilities (those are "possibilities" after all). Economies can only progress along a continuous curve. Therefore to get over the "Humps" in the Pareto frontier, considerable sacrifice may be necessary. This is not easy.

Free market advocates say that economic shocks (disasters) are "useful" for producing economic progress. Economic progress, on the above diagram, entails getting over the humps. Disaster-based "shock" enables this by shaking the economy so far from the Pareto optimal frontier (into the production possibilities set) that it ends up to the left and below the hump, from which it can progress up and towards a more improved economy. But is "shock" truly the only way?

Future envisionings exist that show, in reasonable detail, how the options for a possible future, given the current state of technology, would be better than those that exist today. On the diagram, technologists in a world of Region 1 or 2 might be able to envision Region 3. The question is how to progress on a continuous trajectory towards it. (Let's not nitpick how the regions are depicted and whether they are the frontier or the area; I used MS Paint to draw it.)

The fact is, it is politically difficult to move towards it. Asking constituents to make sacrifices is difficult. However, it might be possible if there were a (public) road map charting projected progress towards that future over time.

We need a clean slate analysis of the economy, independent of where we are today, to show where we can go. Then we need a road map to show in detail how we might get there. Finally, we need to move in that direction with will and conviction.

The one political point I want to make: The situation with renewable energy (as brought up by the movie) falls squarely into this category. On 27 Jul 2010, the US House Majority Leader unveiled the "Clean Energy Jobs and Oil Company Accountability Act" with the two most powerful clean energy provisions missing: a cap on carbon emissions from the electric power sector and a national Renewable Electricity Standard, which would require utilities to generate at least 15 percent of their electricity from renewable sources by 2021. No doubt, this was influenced by the old economy energy firms (fossil fuels) that are now rich with the pickings of their full product life cycles and are using these resources in a harmful attempt to extend these life cycles via lobbying. The Aug 2 - Aug 8 2010 edition of Bloomberg Businessweek warns that this will result in the USA losing out to China in the renewable energy race. (In the above framework, China's economy might be operating at a point left and below its hump, which its status as a "developing" power might support.)

In summary, a clean slate analysis reveals the possibility and what we must sacrifice, over some time horizon, to get there. A road map is a plan to get there. Political will and awareness of where we are going will get us there. Visibility of where we are at all times in the process will help maintain that will.

Monday, July 5, 2010

Road Congestion: Physical Limits vs Information-based Orchestration

On 1 July, the Straits Times published a report titled "ERP system: From gantries to satellites" describing LTA's intent to invite technology firms to test a satellite-based ERP system next year.

The article notes that the new system "will allow LTA to extend its ERP coverage to congested roads anywhere on the island, without having to install more gantries, which cost about $1.5 million each" and that "the system can be adjusted so that motorists pay only if they are approaching a section of congested road. The in-vehicle unit can also be used to notify them that they are about to enter a stretch of road that attracts a charge".

However, a rudimentary analysis of our transportation system would lead one to conclude that given its current characteristics (that is to say: road network, vehicles on the road, etc), road congestion is highly insensitive to information-based methods of congestion reduction such as ERP.

There are physical limitations to the level of throughput a given infrastructure can provide. Even if we were capable of optimally orchestrating traffic (centrally routing all vehicles to ensure optimal performance for the transportation system), a network of heavily jammed roads would be better ameliorated by, for instance, widening a few major roads or reducing the number of vehicles on the road.

In fact, a study of the major means of transportation (bus, train, private vehicle) would clearly show that the current transport infrastructure is operating at or close to its limits. The promotion of information-based systems is in tune with building an "information economy", but we should not lose track of reality, bearing in mind that it is the physical system providing the service. When a system is operating near its limits, its capacity should be increased or its load decreased. Only where an information system can be shown to provide a clear improvement in throughput should any investment be made. Currently, both satellite and land-based ERP fail this test.

Contrary to the claims of the July 1 report, it is not the gantries that have hit their limits; it is the road network.

Friday, May 7, 2010

On Simulations and the Future

Simulations are interesting things. They are the business world's stock tool for understanding and conducting dry runs of proposed operational plans. They have little of the beauty of an analytically derived trajectory of a dynamical system, but businessmen do not bother about matters like that.

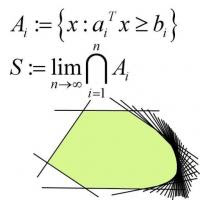

The question is whether simulation is a tool that will be able to cope with the demands the future will place on it. This little note highlights some thoughts I had while discussing the matter on the MIT alumni LinkedIn discussion board.

Computational Workload

Computational complexity is always a problem. Even when simulating rational human decision making, each time a simulated agent has to "make a choice" the simulation solves a convex optimization problem (maybe a linear program), solves a dynamic programming problem, or perhaps uses some search algorithm to arrive at a decision. This can be computationally taxing.

Where things are probabilistic, you need about 26,500 Monte Carlo samples to ensure that the probability that the empirical distribution resulting from the simulation is more than 1% off is at most 1%. That is painful.
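The post does not say which bound the sample count comes from, but the figure is consistent with the Dvoretzky-Kiefer-Wolfowitz (DKW) inequality, which bounds the probability that the empirical distribution function deviates from the true one by more than epsilon by 2·exp(-2·n·epsilon²). A minimal sketch under that assumption (the function name is illustrative only):

```python
import math


def dkw_sample_size(epsilon, delta):
    """Smallest n such that 2 * exp(-2 * n * epsilon**2) <= delta.

    epsilon: tolerated sup-norm error of the empirical distribution function
    delta:   tolerated probability of exceeding that error
    """
    return math.ceil(math.log(2.0 / delta) / (2.0 * epsilon ** 2))


n = dkw_sample_size(epsilon=0.01, delta=0.01)
print(n)  # 26492, i.e. "about 26,500"

# Halving the tolerated error quadruples the workload.
print(dkw_sample_size(epsilon=0.005, delta=0.01) / n)
```

The 1/epsilon² scaling is what makes this painful: each extra digit of accuracy costs a hundredfold more samples.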

I recall that on one of the evenings when I was heading home from campus on the "Big Silver Van", one of my housemates quipped that it was interesting how things deemed to be interesting were usually at least in the NP complexity class. This probably summarizes many of the difficulties in embedding decision making into simulations.

Complexity issues hurt, and linear speedups and improvements do not look likely to help, as the demand is for growing sophistication. A successful simulation study requires discipline in modeling to avoid being swamped by complexity and ending in failure.

Business Matters

In the business world, simulations make sense of the effects of a proposed set of operations under some dynamics and under uncertainty. Indeed, projects these days tend to phrase requirements along the lines of "... shall report the service level with 99% accuracy", which is an appeal to the use of Monte Carlo algorithms rather than simulations of the single-run deterministic variety.

Of course the bulk of simulations in engineering remain the "this is my structure, here are my loads, will it break?" type, but to a very large extent, problems in that regime tend to be of "low complexity" in terms of the rate at which workload scales with desired accuracy.

An interesting point was also raised on the board:

- "When a sim can let me add and subtract new variables as quickly and easily as a spreadsheet, then I get interested."

The Customary Conclusion

Simulation has its work cut out for it, its future success requiring control of both computational complexity and human interface complexity. It will be interesting to see how things go.

Friday, April 2, 2010

On Education: KPIs and Human Behavior

In view of recent comments in the ST forum on education ("Remember the true value of schools", "Reward effort as much as results", etc), I'd like to make a proposal coming from a slightly less orthodox angle.

To cut a long story short, based on their observed actions, teacher KPIs appear to be based on a test score metric. Management often only sees numbers and assumes that all is well. However, proxy metrics are only proxies, and in extreme situations they may lose their association with the value they purport to represent.

No matter what people claim about the public-spirited virtue of educators, they are human (intelligent ones at that) and respond very keenly and creatively to incentives. The pursuit of rankings and test scores is a natural response to KPIs being set in that direction. The unintended consequences are, unfortunately, the well-established phenomena of forcing students to drop subjects they are less than excellent at, the trivialization of non-classroom activities and the subordination of such activities to those of a more test-relevant nature. It was almost as if educators were told: "This is the syllabus; and this is the rewards and remuneration structure. Now get to work." I understand these statements are blunt and unrefined, but they capture the gist of the situation.

While test scores and other KPI-aligned measures have improved greatly, unintended consequences have crept in through the process of chasing incentives, which is a naturally arising optimization process. Here "optimization" is the process of making decisions so as to maximize some measure of performance and/or minimize some measure of undesirability, subject to certain constraints. As a practitioner of quantitative optimization techniques, I would like to state that optimization is an unintelligent process that has to be guided by an intelligent user. An example from the distant realm of finance may shed more light on the situation. When practiced naively, portfolio optimization performs poorly. This is because gaps in the data used to guide the process are maximally exploited. This results in a proposed portfolio that would have performed well in hindsight but severely underestimates future risk. (The pure naive form is beautiful theoretically but needs to be embellished before it is roadworthy.)

Sociologists have proposed that when dealing in the "economic realm" (with rewards and remuneration, for instance), individuals have a tendency to make cold rational decisions. Analogous to the unintended consequences of our education system, the example from finance shows how omissions are exploited in a raw unfeeling optimization process.

Unintended consequences creep into any well-meaning statement of value. For instance, as much as I love the sentiment behind rewarding the hardworking, the execution of such a policy is fraught with (economic) peril. Effort should not be rewarded as much as results, as doing so gives students an incentive to put on a show instead of directing their efforts at improvement. I believe the well-established term is "wayang". Promoting a culture of "wayang" would be counterproductive and harmful to our economy. We cannot give up the desire for a culture of performance, but it is important that the pursuit of a proxy for the abstract notion of "performance" does not retard the achievement of the less quantifiable true goal. Scores alone are a poor measure of effectiveness; I'd like to suggest something a little more nuanced.

I'd like to propose using a type of metric that balances various factors such as (student) appraisal (as a proxy to "students feeling they got something out of the class"), captures the tradeoffs involved in working to achieve the various ends of education and promotes the centering of the actions of teachers to give students a more well rounded experience (which some theories of education tout as superior).

In the graphic below, effectiveness has been presented in the form of indifference curves. "Higher curves" indicate greater effectiveness. The green dotted lines indicate that for the "focused" teacher to progress in the assessment scale, there are minimal achievements in each attribute for each assessment level. This combats lopsidedness. The asymmetry in the minimal-effectiveness-for-progression boundaries captures the fact that we are a performance culture, and that must be foremost in the minds of teachers. In this manner, evaluation-optimizing teachers may straddle the green lines, but the evaluation structure limits the damage of their optimization. (In the diagram, only a (10,10) achieves effectiveness level 10, the true master teacher.)

Such a metric may be extended to multiple criteria. The curves here are of the form:

$$(\text{Effectiveness})^2 = (\text{Metric 1}) \times (\text{Metric 2}),$$

the natural generalization would be

$$(\text{Effectiveness})^n = (\text{Metric 1}) \times (\text{Metric 2}) \times \cdots \times (\text{Metric } n).$$
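Read this way, effectiveness is the geometric mean of the individual metrics. A minimal sketch follows, assuming each metric is scored on a 0 to 10 scale as in the diagram; the function name and the sample scores are illustrative only, and the minimum-achievement thresholds (the green lines) are not modelled here.

```python
import math


def effectiveness(metrics):
    """Geometric mean of the metric scores: effectiveness**n = product of n metrics."""
    if not metrics or any(m < 0 for m in metrics):
        raise ValueError("need at least one non-negative metric")
    return math.prod(metrics) ** (1.0 / len(metrics))


# Hypothetical teachers with the same total score of 12 across two metrics.
balanced = effectiveness([6, 6])    # well rounded
lopsided = effectiveness([10, 2])   # strong on one metric, weak on the other

print(f"balanced: {balanced:.2f}, lopsided: {lopsided:.2f}")
```

Because the metrics are multiplied rather than added, a teacher who neglects one attribute scores lower than a balanced colleague with the same total, and only a perfect score on every metric reaches the top level.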

Another practice in optimization has been to constrain solutions to take a particular form; at times to preclude undesirable behavior and at times with the explicit aim of making them robust to the slings and arrows of outrageous fortune. On the latter, it seems that "robustness" to the onslaughts of the real world is well correlated to "experience".

Less quantitatively, I'd also like to mention that there should be requirements for the education process that ensure that students, on leaving the system, have acquired an "experience set" as evidence of exposure to certain situations. This would increase the likelihood of a "robust response" to changing situations on the part of students. This would of course represent additional teaching workload, and the question arises of the marginal benefit of this and whether it should displace something else. This is very much in line with the "Desired Outcomes of Education" expressed by MOE. This is considerably harder to design well.

Set up a well thought-out optimization problem, and make sure your solutions are robust.
